MOST: Memory Oversubscription-Aware Scheduling for Tensor Migration on GPU Unified Storage

Abstract

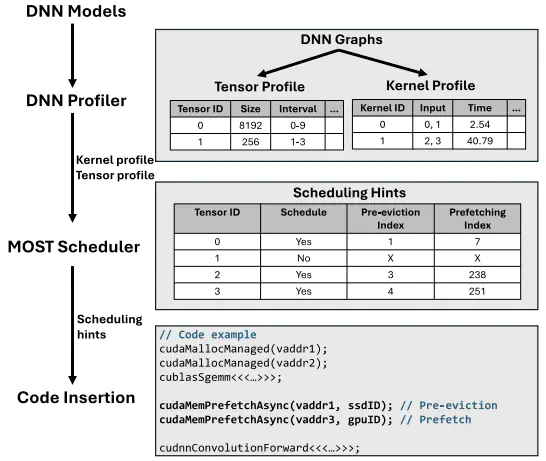

Deep Neural Network (DNN) training demands memory capacity that exceeds what current GPU onboard memory provides. Expanding GPU memory with SSDs is a cost-effective approach. However, the low bandwidth of SSDs introduces severe performance bottlenecks in data management, particularly for Unified Virtual Memory (UVM)-based systems. The default on-demand migration mechanism in UVM causes frequent page faults and stalls, which are exacerbated by memory oversubscription and by eviction on the critical path. To address these challenges, this paper proposes Memory Oversubscription-aware Scheduling for Tensor Migration (MOST), a software framework designed to improve data migration in UVM environments. MOST profiles memory access behavior, quantifies the impact of memory oversubscription stalls, and schedules tensor migrations to minimize overall training time. Using the profiling results, MOST executes newly designed pre-eviction and prefetching instructions within DNN kernel code. By selecting and migrating the tensors that can mitigate memory oversubscription stalls, MOST reduces training time. Our evaluation shows that MOST achieves average speedups of 22.9% and 12.8% over the state-of-the-art techniques DeepUM and G10, respectively.
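
To make the cost of on-demand migration concrete, the following is a minimal toy model, not the MOST scheduler itself. It assumes one tensor per kernel, least-recently-used eviction, a single transfer channel, and that a scheduled transfer can hide only behind the compute time of the immediately preceding kernel. The function name `training_time` and all of its parameters are hypothetical and chosen purely for illustration.

```python
from collections import OrderedDict


def training_time(kernels, capacity, bandwidth, overlap):
    """Total time for a kernel sequence under a toy migration model.

    kernels:   list of (tensor_id, tensor_size, compute_time) tuples
    capacity:  GPU memory capacity, in the same unit as tensor_size
    bandwidth: storage-to-GPU bandwidth, in size units per time unit
    overlap:   False -> every transfer stalls the kernel (on-demand style)
               True  -> transfers may hide behind the previous kernel's compute
    """
    resident = OrderedDict()  # tensor_id -> size, least recently used first
    used = 0
    total = 0.0
    window = 0.0  # compute time of the previous kernel (assumed hiding budget)
    for tensor_id, size, compute in kernels:
        if size > capacity:
            raise ValueError("tensor does not fit in GPU memory")
        transfer = 0.0
        if tensor_id in resident:
            resident.move_to_end(tensor_id)  # hit: refresh LRU position
        else:
            # Oversubscription: evict LRU tensors until the new one fits.
            while used + size > capacity:
                _, victim_size = resident.popitem(last=False)
                used -= victim_size
                transfer += victim_size / bandwidth  # eviction write-back
            transfer += size / bandwidth  # fetch the missing tensor
            resident[tensor_id] = size
            used += size
        hidden = min(transfer, window) if overlap else 0.0
        total += (transfer - hidden) + compute
        window = compute
    return total


# Three 4-unit tensors cycling through an 8-unit GPU memory.
trace = [("a", 4, 1.0), ("b", 4, 1.0), ("c", 4, 1.0), ("a", 4, 1.0)]
print(training_time(trace, capacity=8, bandwidth=4, overlap=False))  # 10.0
print(training_time(trace, capacity=8, bandwidth=4, overlap=True))   # 7.0
```

In this toy trace, moving eviction and fetch off the critical path cuts the total from 10.0 to 7.0 time units. The remaining stalls come from transfers that are longer than the compute available to hide them, which is the kind of cost a migration scheduler has to weigh when choosing which tensors to move.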